Demo to preview the settings

Demo page: https://ezcodedemo3.bubbleapps.io/nsfw

Introduction

Quickly identify whether a given image or GIF contains sensitive or NSFW (not safe for work) content using a pre-trained AI model.

The plugin categorizes images by the probability that they belong to each of the following 5 classes:

- Drawing - safe for work drawings (including anime)

- Hentai - hentai and pornographic drawings

- Neutral - safe for work neutral images

- Porn - pornographic images, sexual acts

- Sexy - sexually explicit images, not pornography

Try it on the demo page: https://ezcodedemo3.bubbleapps.io/version-test/nsfw

Each category is assigned a percentage from 0% to 100% indicating the probability that the image belongs to that category.
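To make the percentage model concrete, here is a minimal TypeScript sketch that represents one result as a record of the five categories and picks the most likely one. The names `NsfwCategory`, `CategoryScores`, and `topCategory` are hypothetical and not part of the plugin, which exposes these values as element states (Porn Probability, Sexy Probability, and so on). The sample percentages are made up for illustration.

```typescript
// Hypothetical model of the five categories reported by the plugin.
// The plugin itself exposes these values as element states, not as this type.
type NsfwCategory = "Drawing" | "Hentai" | "Neutral" | "Porn" | "Sexy";

// Each score is a percentage from 0 to 100.
type CategoryScores = Record<NsfwCategory, number>;

// Return the category with the highest percentage (the first one wins on ties).
function topCategory(scores: CategoryScores): NsfwCategory {
  const entries = Object.entries(scores) as [NsfwCategory, number][];
  let [best, bestScore] = entries[0];
  for (const [category, score] of entries) {
    if (score > bestScore) {
      best = category;
      bestScore = score;
    }
  }
  return best;
}

// Made-up example result.
const example: CategoryScores = { Drawing: 60, Hentai: 5, Neutral: 30, Porn: 2, Sexy: 3 };
console.log(topCategory(example)); // Drawing
```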

Check images and GIFs uploaded by users before using them.

This plugin is a tool for identifying NSFW content, but Bubble's terms on acceptable content still apply.

You may not use this plugin in violation of Bubble's acceptable use policy (https://bubble.is/acceptable-use-policy) or branding guidelines (https://bubble.is/brand-guidelines).

Disclaimer

Keep in mind that NSFW detection isn't perfect, but it is fairly accurate (about 90% on a test set of 15,000 images). It is normal for some images to receive a few percent in unrelated categories.

Best practice is to combine results. For example, if the Hentai, Porn, and Sexy categories each score below 20%, the image can be considered safe to use, even if it scores, say, 60% in the Drawing category while not actually being a drawing.
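The combined rule above can be written as a small check. This is a minimal TypeScript sketch, assuming you have already read the five probability states into a plain object; `NsfwScores`, `isSafe`, and the 20% default are illustrative choices taken from the example, not plugin APIs or recommended values.

```typescript
// Hypothetical shape of the percentages read from the NSFW element states
// (Porn, Sexy, Hentai, Neutral, and Drawing Probability), each from 0 to 100.
interface NsfwScores {
  porn: number;
  sexy: number;
  hentai: number;
  neutral: number;
  drawing: number;
}

// Threshold taken from the example in the text; tune it for your own app.
const UNSAFE_THRESHOLD = 20;

// Safe when every explicit category stays below the threshold.
// Drawing and Neutral are deliberately ignored.
function isSafe(scores: NsfwScores, threshold: number = UNSAFE_THRESHOLD): boolean {
  return [scores.hentai, scores.porn, scores.sexy].every((p) => p < threshold);
}

// Made-up result: a photo scored 60% Drawing, but low in the explicit classes.
const photo: NsfwScores = { porn: 4, sexy: 12, hentai: 8, neutral: 16, drawing: 60 };
console.log(isSafe(photo)); // true
```

Ignoring Drawing and Neutral is the point of the rule: only the three explicit classes decide the outcome, so a misread category (such as a photo scored as a drawing) does not cause a false rejection.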

Don't run more than one check at a time; otherwise the processor may be overloaded, and the page may freeze or other unexpected errors may occur.
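If you trigger checks from your own script rather than directly from a workflow, the one-check-at-a-time rule can be enforced by chaining each new check onto the previous one. This minimal TypeScript sketch assumes each check is an async function returning a Promise; `SequentialRunner` and `fakeCheck` are hypothetical names, and `fakeCheck` only simulates the work with a timer instead of calling the plugin.

```typescript
// Runs tasks strictly one after another, never in parallel.
class SequentialRunner {
  // Settles when the most recently scheduled task settles; never rejects.
  private tail: Promise<unknown> = Promise.resolve();

  run<T>(task: () => Promise<T>): Promise<T> {
    // Start the task only after the previous one has finished.
    const result = this.tail.then(() => task());
    // Keep the chain going even if this task fails.
    this.tail = result.catch(() => undefined);
    return result;
  }
}

let active = 0;
let maxActive = 0;
const log: string[] = [];

// Hypothetical stand-in for a single check: it just waits `ms` milliseconds.
function fakeCheck(name: string, ms: number): () => Promise<string> {
  return async () => {
    active++;
    maxActive = Math.max(maxActive, active);
    await new Promise<void>((resolve) => setTimeout(resolve, ms));
    active--;
    log.push(name);
    return name;
  };
}

const runner = new SequentialRunner();
const done = Promise.all([
  runner.run(fakeCheck("first", 30)),
  runner.run(fakeCheck("second", 10)),
]);
done.then(() => console.log(log.join(", "), maxActive)); // first, second 1
```

Without the runner, the shorter second check would finish first and both would run at once; with it, the peak number of simultaneous checks stays at 1.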

Plugin Elements Properties

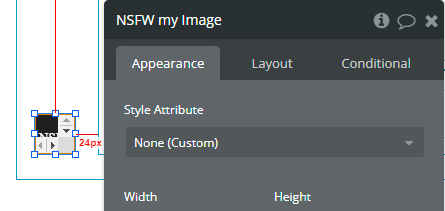

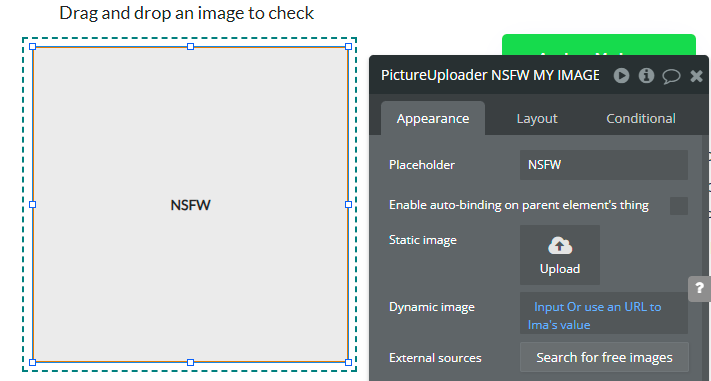

This plugin has one visual element which can be used on the page: NSFW.

NSFW

Identify sensitive or NSFW (not safe for work) content.

Element Actions

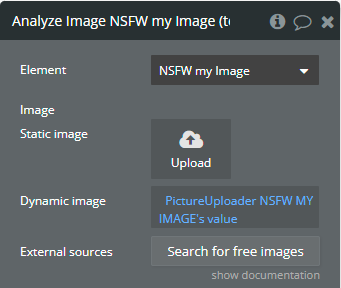

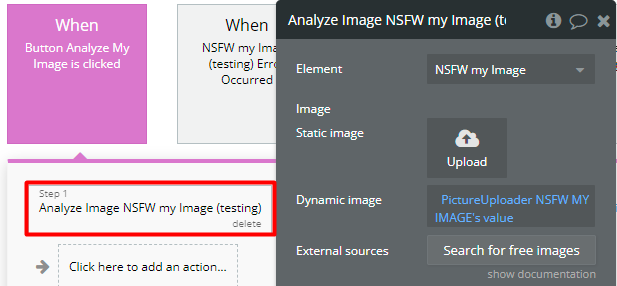

- Analyze Image - Check content from a static image.

Fields:

| Title | Description | Type |
|---|---|---|
| Image | Upload an image or insert a link to it. | image |

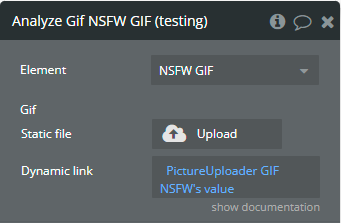

- Analyze Gif - Check content from a dynamic image.

Fields:

| Title | Description | Type |
|---|---|---|
| Gif | Upload a GIF file or insert a link to it. | file |

Element Events

| Title | Description |
|---|---|
| Error Occurred | This event is triggered when an error occurs. |
| Image Checked | This event is triggered when the image has been checked. |

Element States

| Title | Description | Type |
|---|---|---|
| Instance Error | The error that occurred during the checking process. | text |
| Porn Probability | The probability of porn content, as a percentage. | number |
| Sexy Probability | The probability of sexy content, as a percentage. | number |
| Hentai Probability | The probability of hentai content, as a percentage. | number |
| Neutral Probability | The probability of neutral content, as a percentage. | number |
| Drawing Probability | The probability of drawing content, as a percentage. | number |
| Is Loading | Indicates whether a check is in progress. | yes / no |

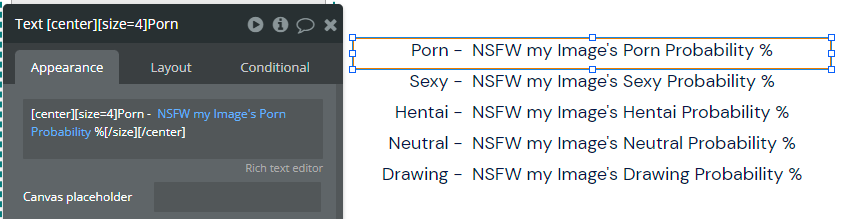

Workflow example

How to check an image

This example shows how to use the NSFW element to check an image.

- An NSFW element is placed on the page.

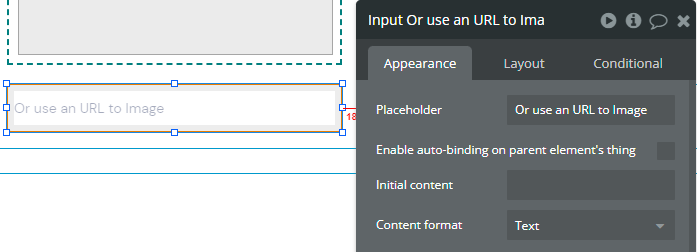

- Input and Picture Uploader elements are used to provide the file.

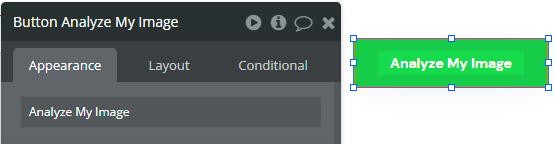

- A Button element starts the check.

- In the workflow, clicking the button calls the Analyze Image action.

- Text elements on the page display the results using the NSFW element's states.